When I run this script from crontab, it works well except for uploading the tarball to Google Drive, although the whole script works perfectly when I run it manually.

gdrive upload -p `cat $backup/dirID` $backup/`date --date '15 hour ago' +%y%m%d_%a_%H`.tar.gz
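A common reason a command works in an interactive shell but fails under cron is that cron starts jobs with a much smaller environment, often with PATH limited to /usr/bin:/bin. The post does not confirm that this is the cause here, so the following is only a minimal sketch for checking it. The helper name `require_cmd` and the extra PATH directory are assumptions, not part of the original script.

```shell
#!/bin/sh
# Assumption: gdrive may live in /usr/local/bin, which cron's default PATH
# usually does not include. Prepend it so the lookup below can succeed.
PATH=/usr/local/bin:$PATH
export PATH

# require_cmd NAME (hypothetical helper): report on stderr and return
# non-zero when NAME cannot be found on the current PATH.
require_cmd() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "missing command: $1 (PATH=$PATH)" >&2
        return 1
    fi
}
```

Calling `require_cmd gdrive || exit 1` near the top of the backup script, and redirecting the cron job's output to a log file, should show whether gdrive is simply not being found when the script runs from cron.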

gdrive upload -p `cat $backup/dirID` $backup/$filename #Upload the backup file using gdrive under the path written in dirID

tar -g $backup/snapshot -czpf $backup/$filename / --exclude=/tmp/* --exclude=/mnt/* --exclude=/media/* --exclude=/proc/* --exclude=/lost+found/* --exclude=/sys/* --exclude=/local_share/backup/* --exclude=/home/*

gdrive mkdir -p $gdriveID `date +%y%m` > $backup/dir

rm -rf $backuproot/`date --date '1 year ago' +%y%m`

echo $backuproot/`date +%y%m` > $backuproot/nf #Save directory name for this period in nf

#Start a new backup period at January first and June first.

gdriveID="blablabla" #This value should be manually replaced with the Google Drive directory ID for each server.

#Program: arklab backup script version 2.0

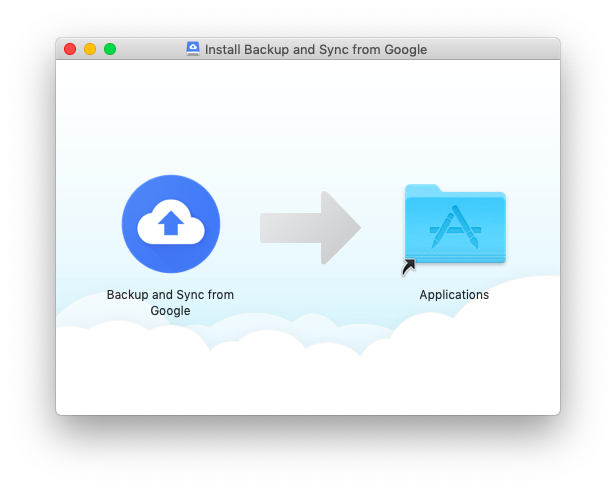

#UPDATE GOOGLE DRIVE MAC NOT WORKING ARCHIVE#

All directories under root are bundled into a tar.gz archive twice a day (at 3 AM and 12 AM), and this archive is then uploaded to Google Drive using the gdrive app.
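The twice-daily schedule described above could be expressed as a single crontab entry. The sketch below writes such an entry to a file for review. The script path, log path, and file name `arklab_backup.cron` are hypothetical, and the `PATH=` line assumes a cron implementation that accepts environment settings in the crontab (as Vixie cron and cronie do).

```shell
#!/bin/sh
# Write a crontab fragment to a file so it can be reviewed before installing.
# All paths below are assumptions; adjust them to the real script location.
cat > arklab_backup.cron <<'EOF'
PATH=/usr/local/bin:/usr/bin:/bin
# minute hour day-of-month month day-of-week command
0 0,3 * * * /local_share/backup/backup.sh >> /local_share/backup/cron.log 2>&1
EOF
```

The hour field `0,3` runs the job at 12 AM and 3 AM. Note that `crontab arklab_backup.cron` replaces the user's whole crontab, so it may be safer to copy the lines in with `crontab -e`. Sending stdout and stderr to a log file keeps any gdrive error message that would otherwise be lost.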
